By now, Generative AI is old news. CEOs, CMOs, business owners and managers are all wondering how they can incorporate AI into products and work routines to make lives easier, generate more revenue and make better decisions with less effort.

The Importance of Failing Fast

Within a product-minded organization, failing fast and validating our ideas with real users is key to lean product delivery. Demoing a low-fidelity prototype to users and stakeholders can provide just enough information on specific features and experiences to get early feedback on product-market fit and, at a high level, technical feasibility. Often, it is key to the early detection of potential problems or misalignments with leadership, which saves time and money in the long run by avoiding costly rework and ensuring the product meets business requirements.

Generative AI Enters the Chat Room

Let’s be honest. Training and deploying products that use an LLM can be time-consuming (and expensive). It depends on factors such as the size and complexity of the model as well as the computational resources that are available. A team can use a pre-trained LLM for a head start. However, if your product requires personalization based on a particular industry, use case, or user base, you may spend a significant amount of time (a few weeks or several months) fine-tuning your LLM to meet your outcomes.

So how do rapid prototyping and Generative AI work together in this new world of ours? How do we design and build with just enough fine-tuning to support valuable features and a user-friendly UI?

Fake It till You Make It … Into Generative AI

Product designers come with a whole slew of tricks up their sleeves when validating concepts with users and stakeholders. My favorite of these is something called the Wizard-of-Oz technique.

This technique involves manually controlling the “AI” behind the scenes. The product designer acts as the hidden operator, providing predefined responses based on user input, creating the illusion of an intelligent interaction.

Once basic interfaces are designed for users to interact with, a set of potential responses is prepared for different user prompts (aka “Golden Utterances”) or actions. The Product Designer provides the response in real time, mimicking the behavior of an AI. They can do this through text chat, voice interaction or manipulating visual elements based on what the user does.

Side note: Many tools offer features to support this technique. For example, Figma has the capability to make real-time changes to a prototype as the user interacts with specific hotspots.

Fine-Tuning Your Wizard’s Responses

In support of the Wizard-of-Oz technique, a Product Designer or Content Strategist should put some thought into how the AI would respond and what it might say when. The following techniques are quick exercises for thinking through and iterating on how to shape the LLM. The goal is to prioritize creating a natural and engaging user experience, even if the interaction is not truly driven by AI. Through each iteration, insights can be fed to the AI team to further tweak their LLM.

Pre-Scripted Interactions

Based on the specific user journey being validated, create a script or flowchart outlining the possible written, verbal or action-oriented interactions between the user and the “AI.” This script defines the responses and outcomes based on user input, simulating a basic level of intelligence. The advantage of this activity is that it allows for quick feedback on a linear interaction with limited complexity. However, the more complex the user journey, the more complex the script. I recommend adopting an Agile mindset and focusing on the smallest, most meaningful chunk of experience that produces value.
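To make the idea concrete, here is a minimal sketch of how such a script could be written down as data for the hidden operator to follow. Everything in it is an assumption for illustration: the step names, the intent labels, the canned wording and the `advance` helper are hypothetical, not part of any particular tool.

```python
# Hypothetical Wizard-of-Oz script: each step holds the canned "AI" response
# and the user intents that move the conversation to the next step.
SCRIPT = {
    "start": {
        "response": "Hi! What would you like help with today?",
        "next": {"asks_for_report": "choose_period"},
    },
    "choose_period": {
        "response": "Sure. Which time period should the report cover?",
        "next": {"gives_period": "summary"},
    },
    "summary": {
        "response": "Here is a draft report based on what you told me.",
        "next": {},
    },
}

# Line the operator falls back on when the user goes off-script.
FALLBACK = "Sorry, I didn't catch that. Could you rephrase?"


def advance(step, intent):
    """Return (next_step, response) for the intent the operator observed."""
    next_step = SCRIPT[step]["next"].get(intent)
    if next_step is None:
        # Off-script input: stay on the current step and use the fallback.
        return step, FALLBACK
    return next_step, SCRIPT[next_step]["response"]
```

Calling `advance("start", "asks_for_report")` moves the session to the `choose_period` step and returns its canned line. How often the fallback fires during testing is a rough signal of where the script is too narrow.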

Random Response Generation

A pre-scripted interaction can be further refined by preparing a pool of pre-written responses and randomly selecting from it based on the user's input or prompt. This creates a sense of variation and avoids repetitive interactions when testing the flow with your users, something you should account for as a risk to the experience. Doing so may surface insights on tone, the level of detail needed and usability.
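A response pool like this can be sketched in a few lines. The intents, the wording of the responses and the `pick_response` helper below are all hypothetical; the only library call used is `random.choice` from the Python standard library.

```python
import random

# Hypothetical pool of pre-written responses, keyed by the user's intent.
RESPONSE_POOL = {
    "greeting": [
        "Hi there! How can I help?",
        "Hello! What are you working on today?",
        "Welcome back. What do you need?",
    ],
    "clarify": [
        "Could you tell me a bit more?",
        "I want to get this right. Can you rephrase that?",
    ],
}


def pick_response(intent, rng=random, last=None):
    """Pick a random response for the intent, avoiding an immediate repeat."""
    # Unknown intents fall back to a clarifying question.
    options = RESPONSE_POOL.get(intent, RESPONSE_POOL["clarify"])
    # Drop the previous response when another option is available.
    candidates = [o for o in options if o != last] or options
    return rng.choice(candidates)
```

Passing a seeded `random.Random` instance as `rng` makes a test session repeatable, which helps when comparing how different users react to the same sequence of responses.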

Validating The Data Within Your Responses

LLM responses and prototype experiences are closely tied to the relevance and value of data. Statistics or facts may be considered “eye-candy” if they are not relevant to the user journey. The following activities may help you find opportunities to reduce training time by using a smaller dataset or a simpler model architecture. This will create efficiencies, but it’s important to keep your users in mind. A generalized LLM may not meet your specialized user goals in all cases. Work with your Product team to understand where corners can be cut and where extra data or architecture refinement is needed.

User Interviews and Research

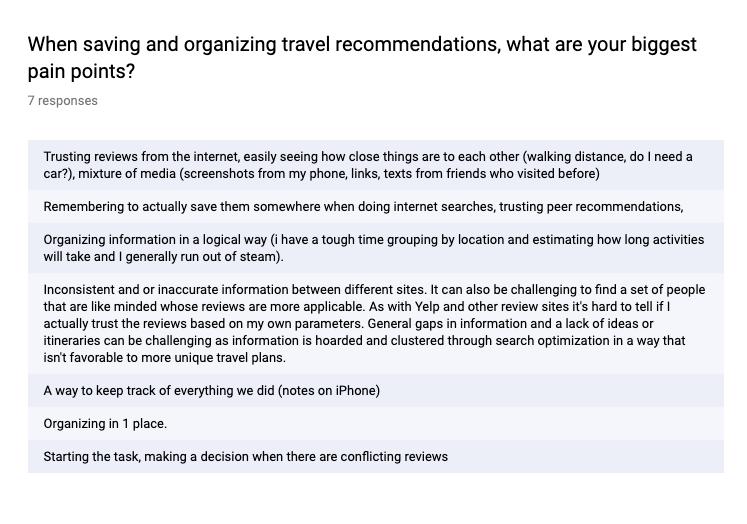

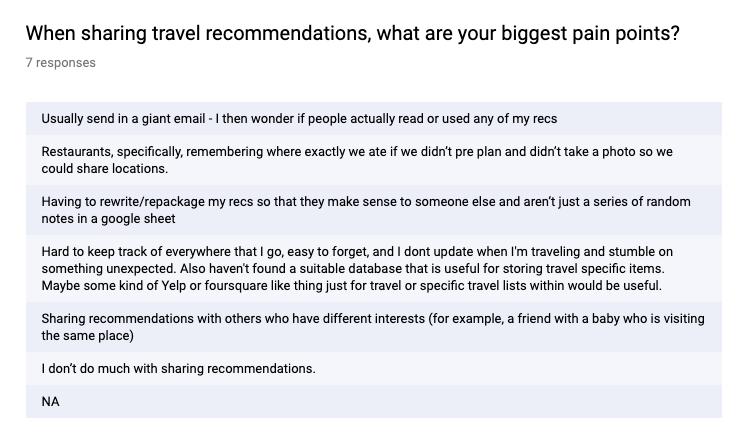

One effective way to validate the value of data is to conduct user interviews. By talking to potential users of your product or service, you can get a better understanding of their needs and pain points. This information can then be used to evaluate whether the data you are collecting is relevant and useful to your users. These example questions can help you understand what would make the most impact to your users:

- What are your biggest challenges or pain points related to the problem this product or service is trying to solve, or to your particular user journey?

- What kind of data would be most helpful to you in overcoming these challenges or pain points?

- What tools do you currently use to help alleviate some of your current challenges?

- How would you use this data in your daily work or life?

In addition to user interviews, there are other methods you can use to validate the value of your data. These include:

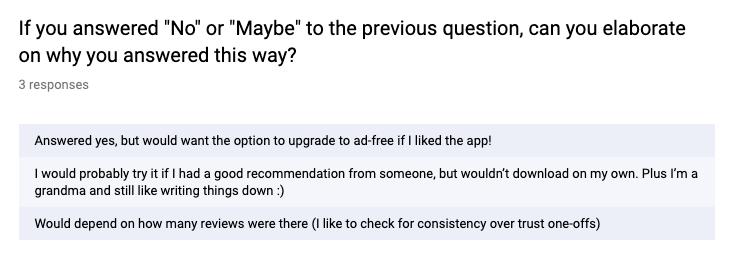

- Conducting surveys or polls to gather feedback from a larger group of people

- Analyzing existing data to identify trends and patterns

Card Sorting

Card sorting is a useful technique when building an LLM prototype to gather feedback on the organization and labeling of the product or generated responses. By asking users to sort cards representing different features or generated responses into categories that reflect their user journey, you can gain insights into how they perceive and understand the product’s structure. With that understanding, you can make informed design decisions that enhance the usability, efficiency, and overall effectiveness of your product. Below are some specific ways card sorting can benefit LLM prototyping:

Identifying Natural Categories

Card sorting can help you identify the most natural and intuitive way to organize the different features, data points and interactions of your LLM prototype. This can help you guide users based on their way of thinking.

Uncovering Hidden Relationships

Card sorting can also help you uncover hidden relationships between different features and interactions.
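One common way to look for these relationships is to count how often participants place two cards in the same group. The sketch below assumes an open card sort recorded as one list of groups per participant; the card names and the `co_occurrence` helper are hypothetical.

```python
from collections import Counter
from itertools import combinations

# Hypothetical card sort results: one list of groups per participant.
SESSIONS = [
    [{"Spend chart", "Budget alerts"}, {"Vendor list"}],
    [{"Spend chart", "Budget alerts", "Vendor list"}],
    [{"Spend chart"}, {"Budget alerts", "Vendor list"}],
]


def co_occurrence(sessions):
    """Count how many participants grouped each pair of cards together."""
    pairs = Counter()
    for groups in sessions:
        for group in groups:
            # Sort so each pair is always stored in the same order.
            for a, b in combinations(sorted(group), 2):
                pairs[(a, b)] += 1
    return pairs
```

Pairs with high counts are candidates for features or data points that users expect to find together, even if the team had planned to keep them apart.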

Label Clarity

Card sorting can also be used to get feedback on the labeling of your responses. By observing how users categorize data points, you can identify labels that are ambiguous, misleading, or insufficiently descriptive, then revise them to reduce confusion.

Label Consistency

Card sorting can help ensure consistency in labeling by identifying instances where different users assign different labels to the same data points. This feedback can be used to develop a standardized labeling system that minimizes variability and helps to reduce the overall burden of training the LLM.
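As a rough illustration, label agreement can be measured by counting, for each card, how many participants chose the most popular label. The data and the `label_agreement` helper below are hypothetical, and the share it reports is a simple proportion rather than a formal agreement statistic.

```python
from collections import Counter

# Hypothetical results: participant -> {card: label they assigned}.
SORTS = {
    "p1": {"Monthly spend": "Budget", "Top vendors": "Suppliers"},
    "p2": {"Monthly spend": "Budget", "Top vendors": "Partners"},
    "p3": {"Monthly spend": "Costs", "Top vendors": "Suppliers"},
}


def label_agreement(sorts):
    """Return {card: (most_common_label, share_of_participants_using_it)}."""
    by_card = {}
    for labels in sorts.values():
        for card, label in labels.items():
            by_card.setdefault(card, Counter())[label] += 1
    result = {}
    for card, counts in by_card.items():
        label, n = counts.most_common(1)[0]
        result[card] = (label, n / sum(counts.values()))
    return result
```

Cards with a low share are the ones where a standardized label is most likely to need discussion before it is used in training data.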

Iterate On Your Techniques

AI and new technology will continue to disrupt how we build products far into the future. We will need to keep sharpening our product mindset tools and evolving along with them. When building new features and products, Agile delivery and Lean Discovery techniques help teams avoid waste and validate that the product they are building is the right one.